What Happened at Capital One?

Many words have been written about the Capital One breach, but a lot of them didn’t explain what actually happened. We care a lot about security in general, and cloud security in particular, so Josh set out to find some words that did explain what happened:

- What We Can Learn from the Capital One Hack (krebsonsecurity.com)

- Capital One Data Breach: What You Can Do Following the Bank Hack (CNET)

- The Capital One breach is more complicated than it looks (theverge.com)

- The Technical Side of the Capital One AWS Security Breach (jcolemorrison.com)

- Capital One’s Official Words

The Krebs article might be the best for this. However, as far as we could tell, no one’s tackled it from a “what can enterprises learn from this?” standpoint, and…that’s what we really care about.

TL;DR: The Event

A hacker named Erratic, who was a former AWS employee, took the following actions:

- Owned (took control of) the Web Application Firewall (WAF) in Capital One’s Amazon Web Services (AWS) Virtual Private Cloud (VPC)

- Used the credentials of the WAF to connect to other AWS resources in the VPC, including their storage objects (S3 Object Stores)

- Synced (copied) the object store to her own storage

“With this one trick, you can get 100M Credit Card Numbers! The secret THEY don’t want you to know!”

– Best ClickBait Ad Ever

…ELI5: The Event

So…there’s a lot about the mechanics of this that’s unclear. But we can explain what seems to be widely accepted as fact. First, some definitions:

- A Web Application Firewall (WAF) sits at an entry point into a system and filters incoming web traffic – it isn’t intended to be a way in, though; it’s intended to be a layer of defense.

- AWS is Amazon’s public cloud.

- A Virtual Private Cloud (VPC) is a cordoned-off part of a cloud – so, it was an area of AWS that was specifically for Capital One.

So…

- Somehow the hacker Erratic was able to log in to one of Capital One’s WAFs.

- From there, she got to their storage objects that represented information about people – specifically, people who had used the business credit card application…application. Overloaded words are the best!

- Finally, she copied those storage objects that represented people to her own area of AWS – like copying a file from someone else’s Google Drive into your Google Drive.

Questions Outstanding

…there are a lot.

It’s not clear how Erratic did #1, logging in to the WAF. The most likely answer is that the username/password was not complicated enough – maybe even a default like admin/admin. But there are other possibilities, and if Capital One has disclosed this piece, we couldn’t find it.

There are a few ways step #2 could have happened – the WAF could have already had access to all of the storage objects, or Erratic could have given the WAF direct access to the storage objects. The J Cole Morrison article above explains one possible scenario: once she was in the WAF, AWS Identity and Access Management (IAM) could have been used to extend the default trust of “well, you’re in the WAF, so okay” to other resources – security people call this a “pivot”.
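To make the “pivot” idea a bit more concrete, here is a toy model in Python. It is not how AWS IAM actually evaluates requests, and the `Role` and `Instance` classes, the role name, and the resource name are all invented for illustration. It only sketches the shape of the problem: permissions are attached to the machine’s role, so whoever controls the machine inherits them.

```python
from dataclasses import dataclass, field


@dataclass
class Role:
    """A hypothetical role: a name plus the (action, resource) pairs it may use."""
    name: str
    allowed: set = field(default_factory=set)

    def can(self, action: str, resource: str) -> bool:
        return (action, resource) in self.allowed


@dataclass
class Instance:
    """A hypothetical machine (for example, a WAF) that runs with a role attached."""
    name: str
    role: Role

    def request(self, action: str, resource: str) -> bool:
        # The storage layer only asks "does this role allow it?".
        # It cannot tell a legitimate process from an intruder on the same box.
        return self.role.can(action, resource)


# Assumed setup: the WAF's role was granted read access to customer data.
waf_role = Role("waf-role", {("read", "customer-applications")})
waf = Instance("waf-1", waf_role)

# Anyone who controls waf-1 gets the same answers the WAF itself would get.
attacker_can_read = waf.request("read", "customer-applications")
attacker_can_delete = waf.request("delete", "customer-applications")
```

In this sketch the intruder never needs the customer data’s own credentials. Being “in the WAF” is enough to read, because that is all the role was ever asked to prove.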

Step #3 is basically…copy/paste. There are probably some interesting nuances here, like…if she didn’t give the WAF authority to read the objects, why did the WAF have the authority? What business use case would require giving an access point read access to an entire store of customer data? Also she would have had to have given something access to write to her own AWS space, at least temporarily.
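One way to ask the “why did the WAF have the authority?” question before an attacker does is to review the policy attached to each role. The sketch below is a deliberately simplified check, not a real IAM evaluator: it only looks at `Allow` statements, ignores conditions, `Deny`, and `NotAction`, and matches a short, assumed list of action patterns. The example policy and bucket name are made up; we have no visibility into what Capital One’s policies actually looked like.

```python
def broad_s3_read_statements(policy: dict) -> list:
    """Return Allow statements that grant S3 read/list on a wildcard resource.

    Simplified heuristic: exact-match against a small set of action patterns.
    """
    read_actions = {"*", "s3:*", "s3:Get*", "s3:GetObject", "s3:List*", "s3:ListBucket"}
    wildcard_resources = {"*", "arn:aws:s3:::*"}

    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may appear unwrapped
        statements = [statements]

    findings = []
    for stmt in statements:
        if stmt.get("Effect") != "Allow":
            continue
        actions = stmt.get("Action", [])
        resources = stmt.get("Resource", [])
        if isinstance(actions, str):
            actions = [actions]
        if isinstance(resources, str):
            resources = [resources]
        grants_read = any(a in read_actions for a in actions)
        is_wildcard = any(r in wildcard_resources for r in resources)
        if grants_read and is_wildcard:
            findings.append(stmt)
    return findings


# Hypothetical policy for a WAF role: it can read every bucket in the account.
waf_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Action": ["s3:GetObject", "s3:ListBucket"], "Resource": "*"},
        {"Effect": "Allow", "Action": "logs:PutLogEvents", "Resource": "*"},
    ],
}

findings = broad_s3_read_statements(waf_policy)
```

Here `findings` holds the one statement that lets the role read and list everything in S3. A policy scoped to a single bucket the WAF genuinely needs would come back with no findings.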

The Pain: $100M-$150M

The Capital One press release stated that this incident will “generate incremental costs of approximately $100 to $150 million in 2019.” Capital One was one of the earliest companies to go to AWS/the cloud, and they made a lot of noise about it. Explaining technology success is one of our favorite things, but publicizing it has trade-offs: you give up the option of keeping your backing infrastructure a secret.

This has led to egg on AWS’s and Capital One’s faces, which is unfortunate, because this really doesn’t have much to do specifically with AWS or clouds in general…

…or does it?

– Not Intended to be ClickBait

Clouds in General

This isn’t the first AWS data breach (see end of the blog for a list of others). The list is not small, unfortunately.

Please raise your hand if you are sure you haven’t been hacked.

We’re gonna say this is partially because AWS is the biggest, has been around the longest, and had to figure out hyperscale stuff without anyone to copy from because they were the first.

But still… yikes.

A big part of this is that Amazon makes things super easy. So easy a caveman could do it, right? And…that’s the trick. It’s super easy to type in a credit card (or even an AWS gift card; I (Josh) have one they gave out at a trade show) and spin up some storage and compute. Unfortunately, it isn’t super easy to spin up security tailored to clouds.

We used to have to wait for infrastructure teams in our data centers to (hopefully) handle the security for us. They’d put your request in a queue, and get to it a week later…then they’d ask the storage admins and VM admins for some data and some compute, and that request would go into a queue…and then, several steps later, the firewall admins would get involved…but doggone it, eventually the pros would secure things like they were trained.

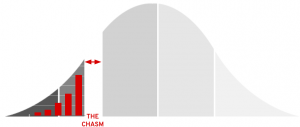

VM-based infrastructure has been around a long time, and the kinks have been worked out. Cloud infrastructure is newer, and exponentially faster to use – that’s one of the biggest appeals. Unfortunately, because it’s newer and because it’s so fast, kinks still exist – and one of the biggest is how to make it secure without slowing down the people using it.

Clouds are not all insecure, the sky is not falling – but they do require more deliberate attention to security than perhaps we’re used to in most of IT.

Takeaways and Recommendations

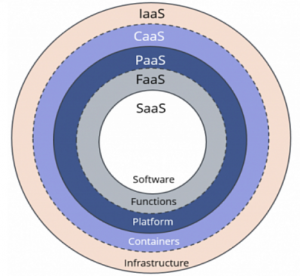

With Infrastructure as a Service (IaaS) as fast and easy as the cloud-based kind, it’s clear that the right security-aware folks are often not involved. It’s extremely easy to get going with platforms like these, which is…kind of the point. Simply put, you can get insecure systems faster and easier than you can get secure systems – for now, anyway. The industry knows this, and is trying to make it better.

Until security catches up to the speed of IaaS, companies need people who can secure their systems to be involved in setting up new platforms, and in setting up best practices for their use. The balance point is doing that without giving up too much of the speed and agility that advances like IaaS provide – ideally, security should be something that everyone agrees is worth the trade.

So…after all of that, here are some recommendations:

- Single layers of security are not enough. You need Defense in Depth, and vital areas like customer data need to be strongly protected regardless of the platform trying to access them.

- Security practices and implementations should be transparent, at least within a company, and questions should be welcomed and encouraged. Open culture helps with security, too.

- Security should be automated as much as possible, and that automation should also be transparent (infrastructure as code).

- Enterprises need to choose platforms that are secure, and that have people whose job is the security of that platform.
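To illustrate the Defense in Depth recommendation, here is a small Python sketch of an access decision that has to pass several independent layers. Everything in it is hypothetical: the role names, the subnet names, and the three checks are ours, and a real deployment would enforce these in the platform rather than in application code. The assumed misconfiguration is that the WAF’s role was granted access to customer data.

```python
from typing import Callable, Dict, List, Tuple

Request = Dict[str, str]
Layer = Tuple[str, Callable[[Request], bool]]

# All names below are hypothetical.
ROLE_GRANTS = {
    "waf-role": {"customer-data"},       # the assumed misconfiguration
    "card-app-role": {"customer-data"},
}
ROLE_KIND = {"waf-role": "perimeter", "card-app-role": "application"}


def role_grant(req: Request) -> bool:
    """Layer 1: does the role have a grant on the resource?"""
    return req["resource"] in ROLE_GRANTS.get(req["role"], set())


def network_origin(req: Request) -> bool:
    """Layer 2: customer data is only served to the application subnet."""
    return req["source"] == "app-subnet"


def role_kind(req: Request) -> bool:
    """Layer 3: only application roles may touch customer data."""
    return ROLE_KIND.get(req["role"]) == "application"


def evaluate(req: Request, layers: List[Layer]) -> Tuple[bool, List[str]]:
    """Return (allowed, names of failed layers). Every layer must pass."""
    failed = [name for name, check in layers if not check(req)]
    return (not failed, failed)


single_layer = [("role-grant", role_grant)]
all_layers = single_layer + [("network", network_origin), ("role-kind", role_kind)]

from_waf = {"role": "waf-role", "source": "dmz", "resource": "customer-data"}
from_app = {"role": "card-app-role", "source": "app-subnet", "resource": "customer-data"}

single_result = evaluate(from_waf, single_layer)   # the lone layer is fooled
layered_result = evaluate(from_waf, all_layers)    # the other layers still say no
app_result = evaluate(from_app, all_layers)        # the real application still works
```

With only the role check in place, the request from the compromised WAF is allowed. With all three layers, it is denied and the result names the layers that stopped it, which also fits the transparency point: the rules live in code that anyone in the company can read and question.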

Other AWS Data Breaches

- https://www.csoonline.com/article/3018592/database-configuration-issues-expose-191-million-voter-records.html

- https://www.csoonline.com/article/3060778/personal-info-of-all-94-3-million-mexican-voters-publicly-exposed-on-amazon.html

- https://www.msspalert.com/cybersecurity-breaches-and-attacks/dow-jones-amazon-aws-cloud-data-leak/

- https://www.healthcareitnews.com/news/accenture-latest-breach-client-data-due-misconfigured-aws-server

- https://www.theregister.co.uk/2017/09/05/twc_loses_4m_customer_records/

- https://www.bloomberg.com/news/articles/2017-11-21/uber-concealed-cyberattack-that-exposed-57-million-people-s-data