Master Data Management Rant

Foreword by Laine:

If you’ll recall our post entitled, Go: a Grumpy Old Developer’s Review, you might remember that sometimes Josh goes on legitimately amazing rants about technology and architecture. HERE IS ONE, YOU ARE ALL WELCOME.

What is Master Data Management?

“Master data management (MDM) is a method used to define and manage the critical data of an organization to provide, with data integration, a single point of reference.”

In other words, MDM tries to create a standard database schema loaded with uniform, processed, “cleaned” data. The data is easy to query, analyze, and use for all application operations. Sounds great!

Most businesses have a lot of data – and if they could access that data accurately, reliably, and rapidly, it would give them a lot of insight into what their world looks like and how it’s changing. They could unify their understanding of themselves, their customers, and their partners, and become more agile (agile as in, “able to change directions quickly in response to changing conditions,” not Agile as in the development methodology).

MDM is sold as a silver bullet that will enable this master view of data, this easy querying, and this agility. But I haven’t seen that actually happen very often.

MDM Kills Agility

MDM is a tool of consistency – and consistency forces things to exist in specific ways. The real problem shows up when you consider that the data of a business is like the mind of the business. Imagine if your mind could no longer accept something as valid input unless it had seen it before – as in, you could understand when you found a new variety of orange, but if you had never seen a starfruit before, you literally could not comprehend it. As one of my colleagues said,

“Building a gold data model is like nailing jello to a tree.”

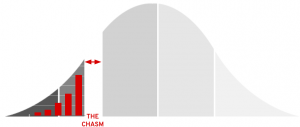

MDM, in its traditional, monolithic definition, kills agility. Basically, it’s building a perfect utopia in which all changes have to be agreed on by everyone, and no one can move in until it’s perfect, and then no one can change ever again. Our job as technologists is not to stagnate – it’s to “deliver business value at the speed of business” (GitLab). Businesses need to move fast, and to do that they must be able to adapt – and if IT systems don’t adapt, then IT systems slow the business down.

I’ve come across multi-year MDM projects full of ETL and data standardization meetings – and the business is finding data points that matter faster than they can be standardized. An MDM initiative that can’t move as fast as every other part of the business just slows it down, eats resources, and eventually dies a dusty death of forgottenness.

A Possible Solution: Jump-Start with a Purchased Model!

Vendors will often sell a partial, typically “industry-standard” model of the business’s data that can be adopted more rapidly – with claims that this will speed time to market for an MDM system. But it doesn’t.

Every organization sees the world slightly differently. This is a good thing. Individual divisions and teams within each organization will also each see the world differently. These different views mean different schemas.

Trying to fit everyone into one data model is like trying to make everyone speak exactly the same English, with no slang, no variations in tone or phrasing, and definitely no new words, connections, or ideas.

The perspective of a business, or any group, changes as the group learns and grows. Locking yourself into an old perception, or attempting to standardize via a process that takes years, is intentionally slowing down your business’s rate of adaptation and growth.

Also, it sets you up for years of arguments between teams, each insisting that its view of the data – and by extension the world – is the correct one.

A Recommendation: Agility in Data Access Models, Not Data Storage Models

The need to have some kind of standardization so that a business’s data is useful is real. What we have seen work is more of a blended approach: spend 20% of the effort on making the data sane, and 80% of the effort on providing clear, accurate, scalable data access via APIs, in-memory databases, and occasionally Operational Data Stores (ODS). The basic idea is to leave the data where it is, in the format that makes sense for the team in charge of it, but provide access and views that make the data usable.
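To make that concrete, here is a minimal sketch in Python. Everything in it is hypothetical (the two team datasets, the field names, and the `CustomerView` shape are invented for illustration). The point is only that each team's storage format stays untouched while a thin access layer adapts both into one usable view.

```python
from dataclasses import dataclass

# Hypothetical data: each team keeps its records in the shape it prefers.
SALES_CUSTOMERS = [
    {"cust_id": "S-100", "full_name": "Ada Lovelace", "region": "EMEA"},
]
SUPPORT_CUSTOMERS = [
    {"id": 7, "first": "Grace", "last": "Hopper", "country_code": "US"},
]


@dataclass(frozen=True)
class CustomerView:
    """A read-only access view; it is not a new storage schema."""
    source: str
    key: str
    name: str


def from_sales(row: dict) -> CustomerView:
    # Adapt the sales team's format without changing how it is stored.
    return CustomerView(source="sales", key=row["cust_id"], name=row["full_name"])


def from_support(row: dict) -> CustomerView:
    # Adapt the support team's format; names are joined only in the view.
    return CustomerView(
        source="support",
        key=str(row["id"]),
        name=f'{row["first"]} {row["last"]}',
    )


def all_customers() -> list[CustomerView]:
    """Combine both teams' data at read time, leaving the sources as-is."""
    views = [from_sales(r) for r in SALES_CUSTOMERS]
    views += [from_support(r) for r in SUPPORT_CUSTOMERS]
    return views
```

If a third team shows up with yet another format, you add one more adapter function. Nobody has to migrate anything or sit through a standardization meeting.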

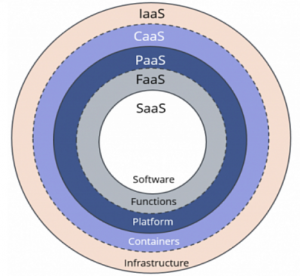

Microservices with versioned APIs, backed by legacy databases, implemented via request/response or pub/sub application communication models, are the easiest applications EVAR. It’s simple to spin them up and scale them using containers and OpenShift. Using this approach, you can provide multiple views of the data, and add more as new connections and ways of thinking appear.
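Here is a rough sketch of what "versioned views over a legacy database" can look like, stripped of any web framework. The legacy column names, the `order_v1`/`order_v2` functions, and the `get_order` dispatcher are all made up for this example. A real service would sit behind HTTP routes or message topics, but the shape of the idea is the same.

```python
# Hypothetical legacy rows, keyed by order number, with column names
# exactly as an old system of record might store them.
LEGACY_ORDERS = {
    "42": {"ORD_NO": "42", "CUST_NM": "Ada Lovelace", "AMT_CENTS": 1999, "STAT_CD": "S"},
}

# Hypothetical decoding of the legacy status codes.
STATUS_NAMES = {"S": "shipped", "P": "pending"}


def order_v1(row: dict) -> dict:
    """Original API view: flat fields, amount as a decimal number."""
    return {
        "id": row["ORD_NO"],
        "customer": row["CUST_NM"],
        "amount": row["AMT_CENTS"] / 100,
    }


def order_v2(row: dict) -> dict:
    """Newer view added later: nested customer, integer cents, readable status."""
    return {
        "id": row["ORD_NO"],
        "customer": {"name": row["CUST_NM"]},
        "amount_cents": row["AMT_CENTS"],
        "status": STATUS_NAMES.get(row["STAT_CD"], "unknown"),
    }


# Each API version maps to a view function. Adding v3 means adding an entry
# here; existing clients of v1 and v2 keep working unchanged.
VIEWS = {"v1": order_v1, "v2": order_v2}


def get_order(version: str, order_id: str) -> dict:
    """Look up the legacy row and render it through the requested view."""
    view = VIEWS[version]
    return view(LEGACY_ORDERS[order_id])
```

Calling `get_order("v1", "42")` and `get_order("v2", "42")` returns two different shapes of the same underlying row, and the legacy table never changes.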

If you need to do your own analytics or heavy-duty data processing/lifting, you can use a temporary or semi-permanent (but not the source of truth) data store such as an in-memory database or an ODS. Again, these are faster to set up and, more importantly, faster to change than a legacy system of record, and they provide a nice balance between the speed of APIs and the performance of an enterprise database.
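As a small illustration of the "temporary, not the source of truth" idea, the sketch below uses Python's built-in `sqlite3` module with an in-memory database. This stands in for whatever in-memory store or ODS you would actually pick. The `SOURCE_ORDERS` extract and the table layout are invented for the example.

```python
import sqlite3

# Hypothetical extract pulled from the system of record (id, region, cents).
SOURCE_ORDERS = [
    ("42", "EMEA", 1999),
    ("43", "EMEA", 501),
    ("44", "APAC", 1200),
]


def build_scratch_store(rows):
    """Load an extract into a throwaway in-memory database.

    The store can be dropped and rebuilt at any time, because the
    system of record remains the source of truth.
    """
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE orders (id TEXT PRIMARY KEY, region TEXT, amount_cents INTEGER)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()
    return conn


def revenue_by_region(conn):
    """Run an analytics query against the scratch copy, not the legacy system."""
    cur = conn.execute(
        "SELECT region, SUM(amount_cents) FROM orders GROUP BY region ORDER BY region"
    )
    return dict(cur.fetchall())
```

Changing the scratch schema here is a one-line edit and a reload, which is exactly the kind of change that takes months of meetings in a system of record.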

Conclusion: MDMs Generally Suck (Relative to Alternatives)

I would love to be wrong. I’d love to hear about some new innovation that makes MDM make sense. But I’ve seen too many MDM initiatives rust out and die, and I’ve seen way too many API projects succeed wildly.

Don’t MDM, API.
